Reverse Proxies for Corporate Networks: Load Balancing, Caching & Security in 2025

Contents

- Introduction: why a reverse proxy still matters in 2025

- How a reverse proxy works: the basics

- Load balancing: algorithms and use cases

- Caching at the reverse proxy: speeding up content delivery

- Server-level protection: WAF, TLS termination and rate limiting

- Use cases: real-world scenarios and architectural solutions

- Integration with observability and logging tools

- Practical tips for configuration and operations

- Architectural patterns: when to use edge proxy vs internal proxy

- Readiness checklist: before you go live

- Pitfalls and gotchas: what to watch out for

- Software and cloud options, briefly

- Conclusion: reverse proxy as a strategic choice in 2025

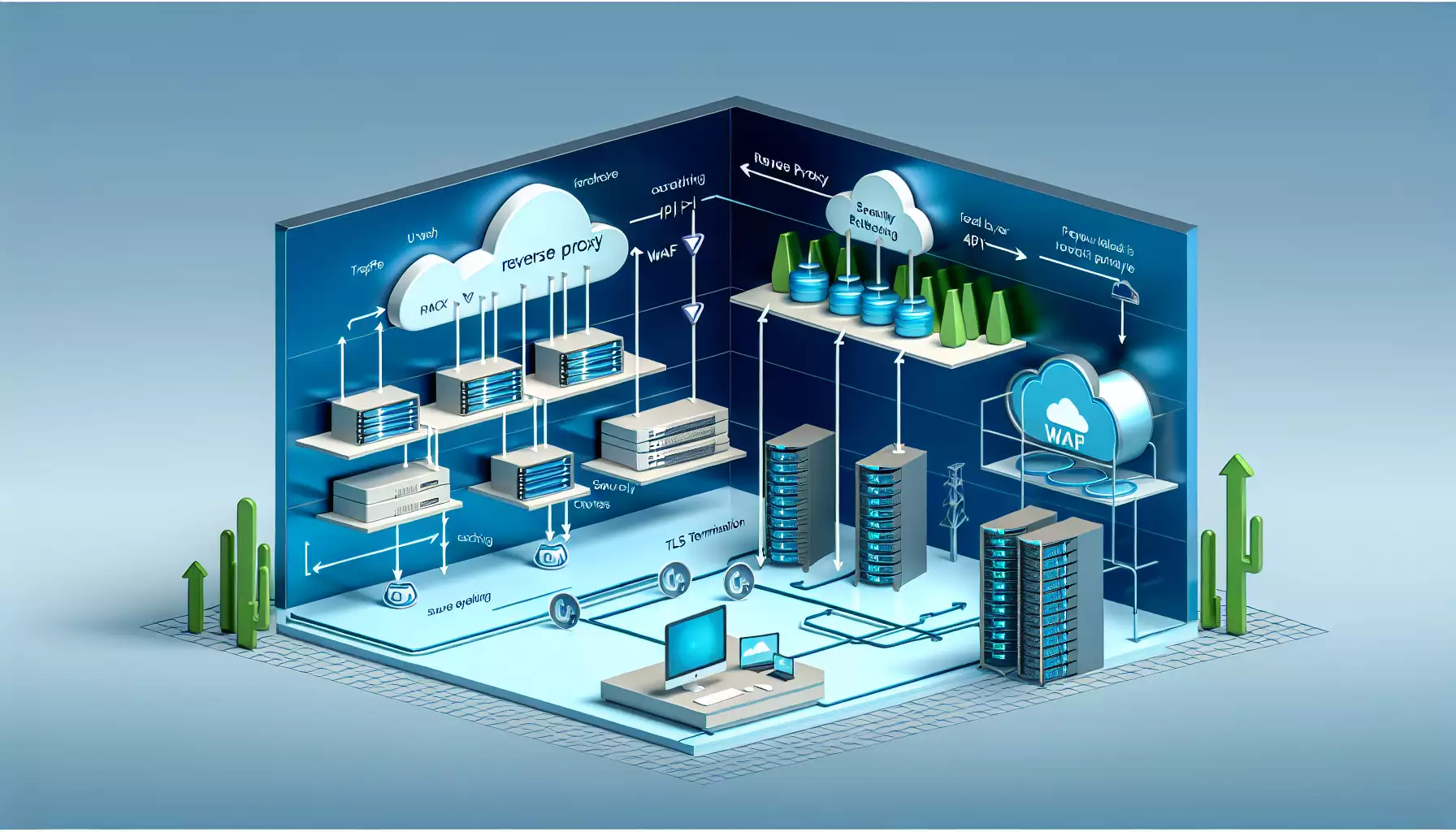

Introduction: Why a Reverse Proxy Still Matters in 2025

Think of a reverse proxy as the doorman to a large office complex: it welcomes requests, checks credentials, directs people to the right floor, holds back unnecessary traffic, and flags suspicious visitors to security. As corporate networks keep spreading across hybrid clouds in 2025, that doorman role only becomes more important. I’ll explain, in plain language and with concrete mental images, how reverse proxies help balance load, cache content, and shield servers from external threats.

How a Reverse Proxy Works: The Basics

A reverse proxy accepts client requests and communicates with internal servers on their behalf. Clients don’t see the internal layout — they see only the proxy. It’s like a reception desk that answers calls and forwards them to the right person. Technically, a proxy can terminate HTTPS, modify HTTP headers, route requests by URL and query parameters, enforce caching and security policies, and collect metrics and logs for later analysis.

Modern reverse proxies implement key features such as TLS termination, authentication token validation, load balancing, caching of static and dynamic content, response compression, request coalescing (collapsing identical concurrent requests into a single backend call), rate limiting, and integration with WAFs and intrusion detection systems. In 2025, these features are standard in many corporate architectures.

Load Balancing: Algorithms and Use Cases

Load balancing is one of the most basic — and most valuable — functions of a reverse proxy. What does that mean in practice? In short: distribute work evenly across available servers so none gets overloaded and users enjoy predictable response times.

Simple algorithms: round-robin and least connections

Round-robin is like handing out tickets: each incoming request goes to the next server on the list. Its simplicity makes it a good fit for homogeneous backends. Least connections sends each request to the server with the fewest active connections, which helps when request durations vary or connections are long-lived (for example, WebSockets or streaming).
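To make the difference concrete, here is a minimal Python sketch of both strategies. It assumes an in-memory list of backend names (`app1`, `app2`, and so on are hypothetical) and ignores health checks, timeouts, and thread safety, all of which a real proxy must handle.

```python
from itertools import cycle


class RoundRobin:
    """Hand each request to the next backend in a fixed rotation."""

    def __init__(self, backends):
        self._rotation = cycle(backends)

    def pick(self):
        return next(self._rotation)


class LeastConnections:
    """Send each request to the backend with the fewest active connections."""

    def __init__(self, backends):
        # Active connection count per backend; on a tie, min() keeps the first listed.
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when the request or connection to `backend` finishes.
        self.active[backend] -= 1


rr = RoundRobin(["app1", "app2", "app3"])
print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']

lc = LeastConnections(["app1", "app2"])
first = lc.acquire()   # 'app1' (tie broken by list order)
lc.acquire()           # 'app2'
lc.release(first)      # app1 finishes its request
print(lc.acquire())    # 'app1': it now has the fewest active connections
```

Round-robin needs no feedback from the backends, while least connections depends on the proxy reliably calling `release`; that bookkeeping is the price of adapting to uneven request durations.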

Smarter algorithms: weighted, least response time, hashing and peer-aware

Weighted algorithms account for differences in server capacity: a more powerful machine gets a larger share of traffic. Least response time chooses the server with the lowest average latency — useful in heterogeneous environments or when external dependencies vary. Hash-based routing (like consistent hashing) directs the same user to the same node, which helps with caching and session affinity. Peer-aware algorithms use info from neighboring proxies in a cluster to make smarter distribution decisions.
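Hash-based routing is the least intuitive of these, so here is a minimal sketch of a consistent-hash ring in Python. It assumes weights are expressed as extra "virtual points" per node (`points_per_weight=100` is an arbitrary, hypothetical default) and uses SHA-256 only to spread keys evenly, not for security.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # Python's built-in hash() is randomized per process, so use a fixed digest.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Map keys (e.g. user IDs) to nodes; each node owns many points on a ring."""

    def __init__(self, points_per_weight=100):
        self.points_per_weight = points_per_weight
        self._ring = []  # sorted (hash, node) pairs

    def add(self, node: str, weight: int = 1):
        # A node with weight 2 gets twice the points, hence roughly twice the keys.
        for i in range(self.points_per_weight * weight):
            bisect.insort(self._ring, (stable_hash(f"{node}#{i}"), node))

    def remove(self, node: str):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def get(self, key: str) -> str:
        if not self._ring:
            raise LookupError("no nodes on the ring")
        # First point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect(self._ring, (stable_hash(key),))
        return self._ring[idx % len(self._ring)][1]


ring = ConsistentHashRing()
ring.add("app1")
ring.add("app2", weight=2)   # hypothetical: app2 has twice the capacity
print(ring.get("user-42"))   # always the same node for this key
```

The useful property is that removing a node only remaps the keys that node owned; every other user keeps hitting the same backend, so its warm cache and any local session state survive.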

Sticky sessions and their cost

Sometimes you need a user’s session to stick to one server — for example, when session state lives in memory rather than a shared store. Sticky sessions solve this, but they reduce balancing flexibility and complicate scaling. In 2025, it’s better to design apps to be stateless or store session data in a distributed store. When that’s impossible, sticky sessions remain a practical tool.

Backend health: health checks and automatic removal

Reliable balancing needs regular health checks: request a dedicated health URL, measure response time, and, where it matters, verify dependencies such as database connectivity. Modern proxies can automatically remove unhealthy nodes from the pool and reintroduce them once they recover. That reduces the number of requests routed to failing servers and lowers the manual overhead for administrators.
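The remove-and-reintroduce logic can be sketched in Python as below. It assumes a hypothetical `/healthz` endpoint on each backend and uses consecutive-failure (`fall`) and consecutive-success (`rise`) thresholds, similar in spirit to the rise/fall counters HAProxy exposes, so a single slow response does not make a node flap in and out of the pool.

```python
import urllib.request


def probe(url: str, timeout: float = 2.0) -> bool:
    """One active check: True if the endpoint answers 2xx within `timeout`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # URLError, HTTPError (4xx/5xx), timeouts, refused connections
        return False


class HealthTracker:
    """Remove a backend after `fall` failed checks, re-add it after `rise` good ones."""

    def __init__(self, backends, fall=3, rise=2):
        self.fall, self.rise = fall, rise
        self.healthy = {b: True for b in backends}
        self._streak = {b: 0 for b in backends}  # results contradicting current state

    def record(self, backend, ok: bool):
        if ok == self.healthy[backend]:
            self._streak[backend] = 0  # result agrees with current state
            return
        self._streak[backend] += 1
        if self._streak[backend] >= (self.rise if ok else self.fall):
            self.healthy[backend] = ok  # flip state: remove or reintroduce
            self._streak[backend] = 0

    def pool(self):
        return [b for b, up in self.healthy.items() if up]


tracker = HealthTracker(["http://10.0.0.11", "http://10.0.0.12"], fall=3, rise=2)
# In a periodic loop, check every backend (including removed ones, so they can recover):
# for backend in list(tracker.healthy):
#     tracker.record(backend, probe(backend + "/healthz"))
```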

Caching at the Reverse Proxy: Speeding Up Content Delivery

Caching is a performance lever that often feels like magic. Why? Because users frequently request the same resources: logos, JS bundles, styles, images, and recurring API responses. Caching at the reverse proxy serves content without hitting slow or overloaded backends.

Types of caching: static and dynamic

Static caching handles immutable assets like images, fonts, and static HTML. Dynamic caching stores rendered fragments or API responses — trickier because you must manage cache invalidation and privacy, but the latency gains can be huge.

Caching policies: Cache-Control, ETag, stale-while-revalidate

A reverse proxy respects Cache-Control, Expires, and ETag headers but can override them if needed. The stale-while-revalidate pattern returns an older-but-acceptable response while the proxy refreshes the cache asynchronously. That approach improves UX during peak load.
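Here is a simplified Python sketch of the freshness decision a shared cache makes from `Cache-Control`, including the stale-while-revalidate window. It deliberately ignores `Expires`, `no-cache`, `must-revalidate`, and the computation of the response's age from `Date`/`Age` headers; the return labels (`"fresh"`, `"miss"`, and so on) are hypothetical names, and triggering the background refresh is left to the caller.

```python
def parse_cache_control(header: str) -> dict:
    """Split 'public, max-age=60' into {'public': True, 'max-age': '60'}."""
    directives = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.strip().lower()] = value.strip().strip('"') or True
    return directives


def _seconds(value) -> int:
    # Valueless or malformed directives count as 0 seconds.
    if not isinstance(value, str):
        return 0
    try:
        return max(0, int(value))
    except ValueError:
        return 0


def cache_decision(cache_control: str, age_seconds: float) -> str:
    """Classify a stored response for a shared cache such as a reverse proxy."""
    d = parse_cache_control(cache_control)
    if "no-store" in d or "private" in d:
        return "bypass"  # a shared cache must not store or reuse this response
    # s-maxage overrides max-age for shared caches.
    lifetime = _seconds(d.get("s-maxage", d.get("max-age", "0")))
    grace = _seconds(d.get("stale-while-revalidate", "0"))
    if age_seconds < lifetime:
        return "fresh"  # serve from cache
    if age_seconds < lifetime + grace:
        return "stale-while-revalidate"  # serve stale now, refresh in the background
    return "miss"  # fetch from the backend before responding
```

For example, with `max-age=60, stale-while-revalidate=30` a copy that is 75 seconds old is still served immediately while the proxy refreshes it, and only after 90 seconds does a client have to wait for the backend.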

Caching APIs: when is it safe?

Caching API responses requires caution: consider query parameters, authentication, and personalization. Often you cache idempotent GET requests with explicit TTLs. For personalized responses you can use more advanced patterns: Vary headers, cache keys that include tokens, and event-driven invalidation.
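One way to apply these rules is in how the cache key is built. The Python sketch below is a minimal illustration under stated assumptions: only `GET`/`HEAD` are cached, query-parameter order does not matter to the backend, every header named in the backend's `Vary` header becomes part of the key, and `user_id` is a hypothetical identifier supplied only for responses cached per user.

```python
import hashlib

CACHEABLE_METHODS = {"GET", "HEAD"}


def cache_key(method, path, query, headers, vary="", user_id=None):
    """Return a cache key string, or None if the request should not be cached."""
    # 'Vary: *' means the response can never be matched, so never cache it.
    if method.upper() not in CACHEABLE_METHODS or vary.strip() == "*":
        return None
    # Sort parameters so ?a=1&b=2 and ?b=2&a=1 share an entry.
    norm_query = "&".join(sorted(p for p in query.split("&") if p))
    lowered = {k.lower(): v for k, v in headers.items()}
    parts = [method.upper(), path, norm_query]
    # Each header named in Vary (case-insensitive) becomes part of the key.
    for name in sorted({h.strip().lower() for h in vary.split(",") if h.strip()}):
        parts.append(f"{name}={lowered.get(name, '')}")
    # Per-user entries keep one user's response from being served to another.
    if user_id is not None:
        parts.append(f"user={user_id}")
    return hashlib.sha256("\n".join(parts).encode()).hexdigest()
```

Hashing the joined parts keeps keys a fixed length and avoids storing raw header values or identifiers in the cache index.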

Varnish, NGINX and built-in caches: which to choose?

Varnish is famous for high-performance HTTP caching and flexible VCL logic. NGINX offers a built-in proxy_cache that’s easy to configure and supports many scenarios. Envoy focuses on cloud-native routing and service-mesh integration, with more limited built-in caching, while some commercial solutions provide distributed caches. Choose based on architecture and scaling needs: Varnish for high-performance front-ends, NGINX for versatility and simplicity, Envoy for cloud-native and microservices environments.

Server-Level Protection: WAF, TLS Termination and Rate Limiting

The reverse proxy is a natural place to deploy protection because it sees all incoming traffic first. In 2025, defense should be layered: block known threats at the proxy, coordinate with network devices, and monitor in real time.

TLS termination and HTTP/2, HTTP/3

Terminating TLS at the proxy offloads encryption work from backends. The proxy can also speak HTTP/2 and HTTP/3 to clients: both multiplex many requests over a single connection, and HTTP/3 runs over QUIC, which avoids TCP head-of-line blocking and reduces latency on lossy networks. Automated certificate renewal via ACME/Let’s Encrypt or integration with corporate PKI is standard practice today.

WAF: rules, signatures and false positives

A Web Application Firewall filters malicious requests, blocking injections, XSS, and other application-layer attacks. A good practice is to run the WAF in monitoring mode first to collect data and reduce false positives, then switch to blocking. Centralizing WAF rules on the proxy simplifies management.
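The monitor-then-block workflow can be illustrated with a toy rule engine in Python. The two regex rules and their IDs are hypothetical and trivially bypassable; a production WAF relies on a maintained ruleset and much more thorough request normalization. The point is only that the same rules run in both modes, and only the final decision changes.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import unquote_plus


@dataclass
class Rule:
    rule_id: str
    pattern: re.Pattern


@dataclass
class ToyWAF:
    rules: list
    mode: str = "monitor"  # "monitor": log matches only; "block": also reject them
    log: list = field(default_factory=list)

    def inspect(self, path: str, query: str) -> bool:
        """Return True if the request may continue to the backend."""
        # Decode %xx and '+' first so encoded payloads are matched too.
        target = unquote_plus(f"{path}?{query}")
        hits = [rule.rule_id for rule in self.rules if rule.pattern.search(target)]
        if hits:
            self.log.append({"mode": self.mode, "target": target, "rules": hits})
        return not (hits and self.mode == "block")


RULES = [
    Rule("sqli-union-select", re.compile(r"union\s+select", re.IGNORECASE)),
    Rule("xss-script-tag", re.compile(r"<\s*script", re.IGNORECASE)),
]
waf = ToyWAF(RULES)  # start in monitoring mode and review waf.log for false positives
# Once the log looks clean: waf.mode = "block"
```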

Rate limiting and IP reputation

Rate limiting defends against brute force and small-scale DDoS attacks. Modern proxies can apply limits by IP, by access token, by header, or even across a global cluster. IP reputation scoring lets you downgrade trust for suspicious addresses proactively.
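A common way to implement per-client limits is a token bucket. The Python sketch below assumes limits are keyed by an arbitrary string (client IP or access token) and that state lives in one process; a cluster-wide limit would need a shared store, and a real implementation would also evict idle keys, which this sketch does not.

```python
import time


class TokenBucketLimiter:
    """Per-key token bucket: refills `rate` tokens/second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable for testing
        self._buckets = {}  # key -> (tokens, last_update_time)

    def allow(self, key: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(key, (self.capacity, now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[key] = (tokens - 1, now)
            return True
        self._buckets[key] = (tokens, now)
        return False


# Hypothetical policy: 5 requests/second per IP, bursts of up to 10.
limiter = TokenBucketLimiter(rate=5, capacity=10)
if not limiter.allow("203.0.113.7"):
    print("429 Too Many Requests")
```

The bucket allows short bursts (up to `capacity`) while capping the sustained rate, which suits login endpoints and APIs better than a hard per-second counter.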

Bot management and behavioral analytics

Bots are getting smarter. Detect them with a mix of behavioral analytics, challenge-response (CAPTCHAs, JavaScript checks), and client profiling based on fingerprints and request speed. Integrate these controls at the proxy for fast, automated responses.

Use Cases: Real-World Scenarios and Architectural Solutions

Now the interesting part — how reverse proxies are used in the real world. Below are scenarios you’re likely to see now or soon.

1. Speeding up websites with caching and compression

Classic case: you run a public website with heavy traffic. Adding a reverse proxy with caching and compression (gzip or Brotli) serves static resources from memory, reduces backend calls, and saves bandwidth. In 2025, adaptive caching is also common: cache behavior varies by Accept-Encoding, geography, and A/B test parameters.
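The negotiation behind compression can be sketched in Python with the standard `gzip` module. Brotli is not in the standard library, so this sketch covers gzip only; the list of compressible content types and the 1 KB threshold are hypothetical policy values, not recommendations from a specific proxy.

```python
import gzip

# Hypothetical policy values: adjust to your content mix.
COMPRESSIBLE_PREFIXES = ("text/", "application/json", "application/javascript", "image/svg+xml")
MIN_BYTES = 1024  # tiny bodies are not worth the CPU or the header overhead


def parse_accept_encoding(header: str) -> dict:
    """Map each coding to its q-value, e.g. 'gzip, br;q=0.8' -> gzip 1.0, br 0.8."""
    qvalues = {}
    for item in header.split(","):
        name, _, params = item.strip().partition(";")
        name = name.strip().lower()
        if not name:
            continue
        q = 1.0
        for param in params.split(";"):
            key, _, value = param.strip().partition("=")
            if key.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        qvalues[name] = q
    return qvalues


def maybe_gzip(body: bytes, content_type: str, accept_encoding: str):
    """Return (body, headers_to_add); compress only when accepted and worthwhile."""
    q = parse_accept_encoding(accept_encoding)
    # An explicit 'gzip;q=0' wins over a wildcard '*'.
    accepted = q.get("gzip", q.get("*", 0.0)) > 0
    worthwhile = content_type.startswith(COMPRESSIBLE_PREFIXES) and len(body) >= MIN_BYTES
    # Vary tells downstream caches to keep compressed and plain variants apart.
    headers = {"Vary": "Accept-Encoding"}
    if accepted and worthwhile:
        headers["Content-Encoding"] = "gzip"
        return gzip.compress(body), headers
    return body, headers
```

Already-compressed formats such as PNG or JPEG are skipped because recompressing them burns CPU for little or no gain.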

2. Balancing microservices in Kubernetes

In Kubernetes, reverse proxies act as Ingress controllers or sidecar proxies in a service mesh. Envoy, Traefik, and Kong can discover services dynamically, update routes, and integrate with CI/CD. That makes scaling smooth without manual reconfiguration.

3. TLS termination and centralized certificate management

Keeping certificates in one place is more manageable. A reverse proxy handles TLS, renews certificates automatically, and forwards traffic into the private network either unencrypted or re-encrypted with internal certificates.

4. Protecting an API gateway

APIs are frequent attack targets. A reverse proxy acting as an API gateway enforces authentication, validates tokens, throttles requests, and logs traffic. It can also aggregate responses from multiple services to reduce the number of calls the client has to make.
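To show what "validates tokens" involves at the gateway, here is a Python sketch using a hypothetical `user:expiry:signature` token signed with HMAC-SHA256. This toy format is not JWT or OAuth; a real gateway would normally validate standard tokens with a maintained library. It only illustrates the two checks every scheme needs: an authentic signature (compared in constant time) and an unexpired lifetime.

```python
import base64
import hashlib
import hmac
import time

SECRET = b"replace-with-a-real-secret"  # hypothetical key shared by issuer and gateway


def _mac(payload: str, secret: bytes) -> bytes:
    return hmac.new(secret, payload.encode(), hashlib.sha256).digest()


def issue(user: str, expires_at: int, secret: bytes = SECRET) -> str:
    """Create a toy 'user:expiry:signature' token (not a JWT)."""
    payload = f"{user}:{expires_at}"
    return payload + ":" + base64.urlsafe_b64encode(_mac(payload, secret)).decode()


def validate(token: str, secret: bytes = SECRET, now=None):
    """Return the user if the token is authentic and unexpired, else None."""
    try:
        user, expires_at, signature = token.rsplit(":", 2)
        expires = int(expires_at)
        given = base64.urlsafe_b64decode(signature)
    except ValueError:  # wrong shape, bad expiry, or bad base64 (binascii.Error)
        return None
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(_mac(f"{user}:{expires_at}", secret), given):
        return None
    current = time.time() if now is None else now
    return user if current < expires else None
```

Rejecting invalid tokens at the proxy means forged or expired requests never consume backend resources.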

5. Edge caching and geo-distribution

If your audience is global, edge proxies cache content close to users to lower latency. Combined with a CDN, proxies control caching policies while the CDN ensures global delivery. In 2025, a hybrid approach — proxy plus CDN — is especially important for media-heavy services.

6. Application-level DDoS protection

Network defenses handle volumetric traffic floods, while proxies deal with application-layer attacks through rate limiting, challenge-response, IP blocking, and automated rules. Key practices include anomaly detection and automated responses: throttling or slowing down suspicious clients, serving cached responses for heavily repeated queries, and alerting operators.

Integration with Observability and Logging Tools

No security system works without monitoring. A reverse proxy should emit metrics, logs, and traces: request counts, 5xx errors, latency, response sizes, cache hit/miss, and more. Feed this data to SIEM, Prometheus, Grafana, and APM tools.

What to log and why

Access logs reveal user and attacker behavior. Error logs show backend failures. WAF logs highlight attack attempts. End-to-end tracing from proxy to backend helps debug latency. Centralize and analyze all of this with automated rules.

Key metrics to watch

- requests per second and concurrent connections;

- average and P95/P99 latency;

- cache hit ratio;

- rate of 4xx/5xx errors;

- number of WAF blocks and rate-limiting events;

- CPU and memory usage on the proxy.
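Several of these metrics can be derived from per-request observations, as in the Python sketch below. It keeps every latency sample in memory and uses the nearest-rank percentile definition, both simplifying assumptions; in production you would typically export counters and histograms to a system such as Prometheus instead.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ProxyStats:
    latencies_ms: list = field(default_factory=list)
    status_counts: dict = field(default_factory=dict)
    cache_hits: int = 0
    cache_misses: int = 0

    def observe(self, latency_ms: float, status: int, cache_hit: bool):
        self.latencies_ms.append(latency_ms)
        self.status_counts[status] = self.status_counts.get(status, 0) + 1
        if cache_hit:
            self.cache_hits += 1
        else:
            self.cache_misses += 1

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile, e.g. p=95 for P95 latency."""
        ordered = sorted(self.latencies_ms)
        rank = math.ceil(p * len(ordered) / 100)
        return ordered[max(rank, 1) - 1]

    def hit_ratio(self) -> float:
        total = self.cache_hits + self.cache_misses
        return self.cache_hits / total if total else 0.0

    def error_rate(self, status_class: int) -> float:
        """Share of responses in a class, e.g. 5 for 5xx or 4 for 4xx."""
        total = sum(self.status_counts.values())
        bad = sum(n for s, n in self.status_counts.items() if s // 100 == status_class)
        return bad / total if total else 0.0
```

Watching P95/P99 alongside the average matters because a small share of very slow requests barely moves the mean but is exactly what users notice.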

Practical Tips for Configuration and Operations

Here’s an honest, practical list of tips I’d give teams in 2025 so the reverse proxy helps instead of becoming a bottleneck.

1. Start simple and expand gradually

Don’t try to enable dozens of WAF rules, caching policies, and complex routes at once. Start with basic load balancing, TLS, and logging, collect metrics, and add features as needed.

2. Use a separate proxy cluster for public traffic

Separating public and internal traffic reduces risk. A public proxy cluster focuses on protection and scaling; an internal cluster focuses on availability and observability.

3. Test security rules in staging

Before switching a WAF to blocking mode, run it in monitoring on staging, fine-tune rules, and only then enable blocking. That prevents accidental denial of service for legitimate users.

4. Plan cache invalidation and release workflows

Cache is powerful, but without proper invalidation it serves stale content. Use webhooks, CI/CD events, or manual commands to invalidate cache during releases.
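A release-driven invalidation workflow often relies on tagging cache entries so that a deploy can purge a group of them at once. The in-memory Python sketch below assumes hypothetical tag names and a hypothetical `on_release` hook called from CI/CD; real proxies expose purge or ban mechanisms with their own interfaces.

```python
from collections import defaultdict


class TaggedCache:
    """In-memory cache whose entries carry tags, so a deploy can purge by tag."""

    def __init__(self):
        self._store = {}
        self._tags = defaultdict(set)  # tag -> keys carrying that tag

    def put(self, key, value, tags=()):
        self._store[key] = value
        for tag in tags:
            self._tags[tag].add(key)

    def get(self, key):
        return self._store.get(key)

    def purge_tag(self, tag) -> int:
        """Drop every entry carrying `tag`; return how many were removed."""
        removed = 0
        for key in self._tags.pop(tag, set()):
            if key in self._store:
                del self._store[key]
                removed += 1
        return removed


def on_release(cache: TaggedCache, changed_tags) -> int:
    """Hypothetical CI/CD hook: purge everything tagged by this release."""
    return sum(cache.purge_tag(tag) for tag in changed_tags)
```

Purging by tag avoids both extremes: flushing the whole cache on every deploy, and hunting down individual URLs by hand.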

5. Ensure auto-scaling and redundancy

The proxy is critical. Implement horizontal scaling, health checks, self-healing, and backup configuration sets. Test recovery and failover under real load.

6. Document configurations and rules

When you have dozens of rules and cache patterns, lack of documentation quickly becomes chaos. Document why a rule exists, who owns it, and how to test changes.

Architectural Patterns: When to Use Edge Proxy vs Internal Proxy

Splitting proxy roles helps manage risk and scale services. An edge proxy handles internet traffic: CDN integration, TLS, WAF, and rate limiting. An internal proxy operates inside the perimeter: microservice routing, policy enforcement, and observability.

Pattern: Single Entry Point

One external proxy in front of all services is simple and makes certificate and security rule management easy. The downside is a single point of failure unless you provision HA and replication.

Pattern: Multi-Tier Proxy

Multiple proxy layers — an edge layer for protection and caching, an internal layer for microservice balancing, and sidecar proxies for the service mesh — provide flexibility and separation of responsibilities.

Pattern: Service Mesh + External Proxy

Sidecar proxies (e.g., Envoy) in the mesh handle inter-service security and telemetry, while an external reverse proxy deals with TLS termination and border protection. This is a modern, practical pattern for large distributed systems.

Readiness Checklist: Before You Go Live

Here’s a compact checklist to run through before putting a reverse proxy into production. It’s simple, but it buys you calmer nights.

- TLS termination and automated certificate renewal configured;

- health checks for backends enabled;

- cache policy and invalidation mechanism verified;

- WAF configured and tested in monitoring mode;

- rate limiting and brute-force protection implemented;

- metrics and centralized logs going to SIEM/Prometheus;

- horizontal scaling and high availability ensured;

- load and failure scenario testing completed;

- configuration rules, owners, and recovery procedures documented.

Pitfalls and Gotchas: What to Watch Out For

Even with a solid architecture, mistakes happen. Here are common pitfalls teams often miss.

Resilience without observability is a myth

If you have auto-scaling but no visibility, you won’t know why problems happen. Metrics and alerts aren’t decorative — they’re how you respond fast.

Caching personalized content is risky

If you cache personalized pages incorrectly, users may see someone else’s data. Always validate Vary headers and use unique cache keys tied to sessions or tokens.

Overzealous WAF rules kill UX

A too-aggressive WAF can block legitimate actions. Test rules in staging, use allowlists, and adopt adaptive policies.

Encryption and compression can be costly on old servers

Older or weaker backends may struggle with the CPU cost of encryption and compression. Offloading TLS termination and compression to the proxy helps, but encrypting traffic inside the network still matters for security.

Software and Cloud Options, Briefly

Choosing between NGINX, HAProxy, Varnish, Envoy, commercial gateways, and cloud providers depends on scaling, flexibility, and integration needs. NGINX is versatile, HAProxy is rock-solid for L4/L7, Varnish excels at caching, and Envoy fits cloud-native and service-mesh patterns. Commercial gateways often bundle security features and a user-friendly UI.

Cloud providers offer managed load balancers and WAFs that reduce operational effort but require trust in the provider and can be costly at very high scale.

Conclusion: Reverse Proxy as a Strategic Choice in 2025

A reverse proxy is more than an intermediary. It’s a strategic node that boosts performance, increases architectural flexibility, and protects apps at the network edge. In 2025, with distributed architectures and evolving threats, a well-tuned proxy is essential for corporate security and optimization. Start with small, effective steps — TLS termination, basic load balancing, and logging — and then add caching, WAF, and observability. Document decisions, test in staging, and monitor metrics — then your proxy will behave like a reliable doorman at the entrance to your digital office.

FAQ

- Which is better for caching: Varnish or NGINX?

Varnish is great for high-performance HTTP caching and gives extensive control via VCL. NGINX is easier to integrate as a general-purpose solution when you need both load balancing and caching in one place.

- Can you trust a WAF completely?

No — a WAF is a vital layer, but not a silver bullet. Use it alongside rate limiting, network defenses, and incident monitoring.

- How should personalized APIs be handled when caching?

Cache only non-personal parts, or use cache keys that include user identifiers and Vary headers. Event-driven invalidation is also a powerful tool.

- Is a separate proxy cluster needed for the edge?

Yes — separating edge and internal proxies improves security and manageability, especially in hybrid or multi-cloud environments.

- How do you test WAF rules before enabling them in production?

Run the WAF in monitoring mode on staging or limited traffic, collect logs to analyze false positives, tune rules, and then progressively enable blocking.